Data engineering is the foundation that enables organizations to leverage data effectively. Data engineers are responsible for designing, building, and managing the infrastructure and architecture needed to store, process, and analyze large volumes of data. As data continues to grow exponentially, data engineers have become highly sought after across industries.

Some key responsibilities of a data engineer include:

Data Architectures and Infrastructure

- Designing and implementing reliable data pipelines using ETL processes to move data from various sources into storage repositories

- Building scalable data warehouses and data lakes on SQL databases, NoSQL stores, and Hadoop

- Ensuring optimal data processing performance using technologies like Apache Spark for big data workloads

- Integrating siloed data sources into unified systems

Programming Languages

- Writing code and scripts using languages like Python, R, and SQL to transform, model, and process data

- Building data pipelines, ETL logic, and applications using programming languages

- Automating repetitive data tasks through scripting

Cloud Computing Platforms

- Leveraging managed cloud platforms like AWS, Azure, and Google Cloud Platform for secure and scalable data infrastructure

- Implementing data solutions on cloud versus on-premises infrastructure

Analytics and Business Intelligence

- Serving processed data to drive analytics and business intelligence through BI tools like Tableau

- Providing self-service access to data for end users through APIs and interfaces

A data engineer’s work serves as the critical foundation for key data-driven functions like analytics, reporting, AI and machine learning. As data’s value grows, skilled data engineers will continue to be integral to extracting maximum utility from data assets. Organizations are investing heavily in data capabilities, with data engineer roles being a top priority.

Data Architectures and Infrastructure

The core of a data engineer’s responsibilities involves designing, building and supporting the fundamental data infrastructure that enables the capture, storage, processing and usage of data at scale. This includes key architectural components like:

Data Pipelines and ETL

- Implementing ETL (extract, transform, load) pipelines that automatically move data from a variety of transactional systems and data sources into data repositories

- Ensuring consistent data flows with efficient transformation logic to prepare data for downstream usage

- Scripting routine extraction from source databases and applications using change data capture
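Log-based change data capture reads a database's transaction log. A simpler query-based variant, sketched below, pulls only the rows whose `updated_at` timestamp is newer than the last saved watermark. This is a minimal illustration, not a production CDC tool: the `orders` table, its columns, and the in-memory SQLite "source" are hypothetical stand-ins for a real transactional database.

```python
import sqlite3

# Hypothetical source table standing in for a transactional database.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 10.0, "2024-01-01T09:00:00"),
        (2, 25.5, "2024-01-02T10:30:00"),
        (3, 7.25, "2024-01-03T08:15:00"),
    ],
)


def extract_changes(conn: sqlite3.Connection, watermark: str) -> tuple[list[tuple], str]:
    """Return rows changed after `watermark` plus the new watermark to persist.

    ISO-8601 timestamps in one fixed format compare correctly as strings.
    """
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # Advance to the newest timestamp seen; keep the old watermark if nothing changed.
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


# First run: pick up everything changed after the previously saved watermark.
changes, watermark = extract_changes(source, "2024-01-01T12:00:00")
print(changes)  # [(2, 25.5, '2024-01-02T10:30:00'), (3, 7.25, '2024-01-03T08:15:00')]

# Next run: nothing new yet, so no rows and an unchanged watermark.
print(extract_changes(source, watermark))  # ([], '2024-01-03T08:15:00')
```

One design caveat: a timestamp watermark cannot see hard deletes, which is one reason log-based CDC is often preferred when deletes matter.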

| Stage | Description | Tools |
|---|---|---|
| Extract | Pull data from sources | Kafka, File APIs |
| Transform | Cleanse, shape data | Python, Spark |
| Load | Insert data into targets | SQL, NoSQL |
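The three stages in the table can be sketched as three small functions. This minimal version sticks to the Python standard library. An in-memory CSV string stands in for a source system and an in-memory SQLite database stands in for the target. The column names and cleaning rules are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system.
RAW_CSV = """customer_id,country,amount
1, us ,19.99
2,DE,5.00
3,,12.50
"""


def extract(text: str) -> list[dict]:
    """Extract: parse raw CSV rows into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: trim and upper-case country codes, cast amounts, drop rows without a country."""
    cleaned = []
    for row in rows:
        country = row["country"].strip().upper()
        if not country:
            continue  # skip incomplete records
        cleaned.append((int(row["customer_id"]), country, float(row["amount"])))
    return cleaned


def load(rows: list[tuple], conn: sqlite3.Connection) -> int:
    """Load: insert cleaned rows into the target table and return its row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (customer_id INTEGER, country TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]


target = sqlite3.connect(":memory:")
print(load(transform(extract(RAW_CSV)), target))  # 2
```

Keeping each stage a separate function makes it easy to test transformations in isolation and to swap the source or target without touching the cleaning logic.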

Data Warehouses and Data Lakes

- Building structured data warehouses optimized for analysis using SQL or columnar storage

- Designing data lakes on cost-efficient object stores to land raw data processed via Spark
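Data lakes commonly organize raw files into Hive-style partition directories such as `dt=2024-01-01/`. Engines like Spark and Hive can then skip irrelevant files when a query filters on the partition column. The sketch below writes JSON Lines files into that layout on the local file system. A real lake would target an object store such as Amazon S3. The `events` dataset, the `dt` column, and the `part-0000.jsonl` file name are hypothetical.

```python
import json
import tempfile
from collections import defaultdict
from pathlib import Path


def write_partitioned(records: list[dict], root: Path, partition_key: str) -> list[Path]:
    """Group records by `partition_key` and write one JSON Lines file per partition."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for record in records:
        # The partition value lives in the directory name, so drop it from the row itself.
        groups[str(record[partition_key])].append(
            {k: v for k, v in record.items() if k != partition_key}
        )

    written = []
    for value, rows in sorted(groups.items()):
        part_dir = root / f"{partition_key}={value}"  # e.g. events/dt=2024-01-01/
        part_dir.mkdir(parents=True, exist_ok=True)
        path = part_dir / "part-0000.jsonl"
        with path.open("w", encoding="utf-8") as fh:
            for row in rows:
                fh.write(json.dumps(row) + "\n")
        written.append(path)
    return written


events = [
    {"dt": "2024-01-01", "user": "a", "action": "click"},
    {"dt": "2024-01-02", "user": "b", "action": "view"},
    {"dt": "2024-01-01", "user": "c", "action": "view"},
]
with tempfile.TemporaryDirectory() as tmp:
    for p in write_partitioned(events, Path(tmp) / "events", "dt"):
        print(p.parent.name, p.name)
```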

Hadoop and NoSQL Databases

- Storing massive volumes of unstructured data in distributed systems such as Hadoop and related big data technologies

- Implementing flexible NoSQL databases like MongoDB and Cassandra to handle diverse data

Apache Spark and Big Data Tech

- Moving data engineering beyond MapReduce with faster in-memory systems like Apache Spark

- Utilizing open-source big data tech like Kafka, Flink, Druid, and Hive at scale

Data architecture work balances technical considerations like latency, throughput, and tooling against business needs for reliability, accessibility, and performance.

Programming Languages

Data engineers rely heavily on coding skills to execute the construction and orchestration of data pipelines, applications, and analytics systems. Fluency across key programming languages and environments enables rapid development and deployment.

Python

- Python has emerged as the dominant language for data engineering tasks due to its flexibility, scalability and vast libraries

- Python powers key parts of the modern data stack, including ETL, data analysis, and infrastructure automation

- Python libraries like pandas, NumPy, and SciPy are well suited to ad-hoc data tasks

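```python
# Example ETL pipeline in Python
import pandas as pd
from sqlalchemy import create_engine


def transform(df: pd.DataFrame) -> pd.DataFrame:
    """Placeholder transform (illustrative): drop duplicate and fully empty rows."""
    return df.drop_duplicates().dropna(how="all")


# Extract data from CSV
data = pd.read_csv("data.csv")

# Transform data
data = transform(data)

# Load data to PostgreSQL (requires a PostgreSQL driver such as psycopg2)
engine = create_engine("postgresql://localhost:5432/mydb")
data.to_sql("my_table", engine, index=False)
```

R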

- R brings robust statistical capabilities and visualization to data tasks, complementing Python’s general-purpose strengths

- Tidyverse and other R packages create a powerful toolkit for exploring, modelling and parsing data

- R integrates tightly with IDEs like RStudio for interactive experimentation

SQL

- SQL remains the standard language for querying, manipulating, and defining data in database systems, whether on-premises or in the cloud

- ANSI SQL improves portability across data warehouses like Snowflake, Azure Synapse Analytics, and Google BigQuery, although each adds its own dialect extensions

- Procedural SQL expands possibilities for orchestration, programming, and automation inside the database
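To illustrate standard SQL, the query below sticks to widely supported constructs (a common table expression, `GROUP BY`, and `ORDER BY`) with no vendor-specific functions, so it should need little or no change on most SQL engines. It runs here against an in-memory SQLite database from Python. The `orders` table and its rows are hypothetical.

```python
import sqlite3

# Standard SQL: a CTE plus GROUP BY, with no vendor-specific functions.
QUERY = """
WITH regional AS (
    SELECT region, SUM(amount) AS total_sales, COUNT(*) AS order_count
    FROM orders
    GROUP BY region
)
SELECT region, total_sales, order_count
FROM regional
WHERE total_sales > 100
ORDER BY total_sales DESC
"""

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",
    [("EU", 80), ("EU", 45), ("US", 60), ("APAC", 150)],
)
for row in conn.execute(QUERY):
    print(row)  # ('APAC', 150, 1) then ('EU', 125, 2)
```

Dialects usually diverge in date and string functions and in row-limiting syntax (`LIMIT` versus the standard `FETCH FIRST n ROWS ONLY`), so isolating those parts makes queries easier to port.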

Together, Python, R, and SQL form a flexible, production-ready language stack: SQL for in-database work, Python for pipelines and automation, and R for statistical analysis.

Cloud Computing Platforms

Cloud platforms have become the dominant infrastructure for building modern data solutions. Managed services greatly simplify deploying and scaling data pipelines, warehouses, lakes, and analytics systems. Core cloud providers include:

AWS

- AWS offers a very broad range of data services, including Redshift, EMR, Athena, QuickSight, and SageMaker

- Fully managed offerings reduce operational overhead compared with administering open-source software on EC2

- Serverless options enable pay-per-use data processing with Lambda and Glue
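To make the serverless model concrete: a Python Lambda function is a handler that receives an event payload and a context object. The sketch below reads the bucket name and object key from an S3 object-created notification, following the `Records[].s3.bucket.name` / `Records[].s3.object.key` shape of S3 event notifications. The handler name `lambda_handler` is only the conventional default, and the bucket and key in the sample event are made up. A real function would go on to read and process each object (for example with boto3).

```python
from urllib.parse import unquote_plus


def lambda_handler(event: dict, context: object) -> dict:
    """Collect the S3 object URIs referenced in an S3 event notification.

    Illustrative only: a real pipeline would download and process each object here.
    """
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append(f"s3://{bucket}/{key}")
    # S3 itself ignores the return value; it is returned so the handler can be checked locally.
    return {"objects": objects}


# Local check with a trimmed-down sample event (bucket and key are hypothetical).
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "raw-landing-zone"}, "object": {"key": "sales/daily+report.csv"}}}
    ]
}
print(lambda_handler(sample_event, None))
```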

Azure

- Azure brings strong integration with the Windows ecosystem and support for .NET languages

- Azure Synapse Analytics combines data warehousing and big data analytics

- Azure Databricks provides a collaborative Spark environment

Google Cloud Platform

- Google Cloud Platform (GCP) delivers data tools like BigQuery, Dataflow, and Dataproc

- Deep learning capabilities with Vertex AI, TensorFlow and TPUs

- Fully managed public cloud with a global network

| Cloud | Pros | Cons |
|---|---|---|
| AWS | Broadest capabilities, largest community | Can have complex pricing |
| Azure | Tight Windows/Office integration | Fewer big data features than AWS |
| GCP | Strong analytics and ML offerings | Smaller market share than AWS/Azure |

Choosing a platform involves balancing capabilities, budget, team skills, and compliance requirements. Multi-cloud deployments are also common as a way to reduce vendor lock-in, so cloud data engineers benefit from staying fluent across platforms.

Analytics and Business Intelligence

A key objective of building data infrastructure is enabling analytics and business intelligence that drive smarter decisions. Data engineers play a central role in making data consumable.

Analytics Tools

- Connect and model data in visual tools like Tableau for interactive analysis

- Build dashboards and reports with drill-down showing key metrics and KPI trends

- Embed statistical summaries like cohort analysis into BI using Python and R

Business Intelligence Platforms

- Implement data warehouse schemas that support BI using star or snowflake dimensional modelling, in tools like SQL Server BI, SAP BusinessObjects, and Qlik

- Build ETL processes to populate BI platforms nightly, ensuring fast querying

- Manage BI metadata like hierarchies, attributes, and data category tags
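A star schema puts measurable events in a central fact table and descriptive attributes in surrounding dimension tables, joined by keys. The minimal sketch below builds one fact table and two dimensions in an in-memory SQLite database, then runs a typical BI rollup. All table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Dimension tables hold descriptive attributes.
    CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, category TEXT);

    -- The fact table holds measures plus foreign keys to each dimension.
    CREATE TABLE fact_sales (
        date_key INTEGER REFERENCES dim_date (date_key),
        product_key INTEGER REFERENCES dim_product (product_key),
        quantity INTEGER,
        revenue INTEGER
    );

    INSERT INTO dim_date VALUES (20240115, 2024, 1), (20240210, 2024, 2);
    INSERT INTO dim_product VALUES (1, 'Books'), (2, 'Games');
    INSERT INTO fact_sales VALUES
        (20240115, 1, 3, 45), (20240115, 2, 1, 60), (20240210, 1, 2, 30);
    """
)

# Typical BI rollup: revenue by month and product category.
rollup = conn.execute(
    """
    SELECT d.year, d.month, p.category, SUM(f.revenue) AS revenue
    FROM fact_sales AS f
    JOIN dim_date AS d ON f.date_key = d.date_key
    JOIN dim_product AS p ON f.product_key = p.product_key
    GROUP BY d.year, d.month, p.category
    ORDER BY d.year, d.month, p.category
    """
).fetchall()
print(rollup)  # [(2024, 1, 'Books', 45), (2024, 1, 'Games', 60), (2024, 2, 'Books', 30)]
```

In a snowflake schema, a dimension such as `dim_product` would itself be normalized further (for example, into a separate category table), trading simpler maintenance for extra joins.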

Dashboards and Reporting

- Provide business users self-service access to data through SQL querying or simple drag-and-drop UIs

- Build aggregated views of KPIs using database constructs like pre-computed summary tables

- Map web analytics event data into structures supporting customer journey analysis
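One common way to structure raw web analytics events for customer journey analysis is sessionization. Sort each user's events by time, and start a new session whenever the gap between consecutive events exceeds an inactivity timeout. The sketch below assumes a 30-minute timeout (a widespread convention, not a rule) and hypothetical `(user, timestamp, page)` event tuples.

```python
from datetime import datetime, timedelta
from itertools import groupby

SESSION_TIMEOUT = timedelta(minutes=30)  # assumed inactivity threshold


def sessionize(events: list[tuple[str, datetime, str]]) -> list[dict]:
    """Group (user, timestamp, page) events into per-user sessions with ordered page paths."""
    sessions = []
    ordered = sorted(events, key=lambda e: (e[0], e[1]))
    for user, user_events in groupby(ordered, key=lambda e: e[0]):
        current = None
        last_ts = None
        for _, ts, page in user_events:
            # Start a new session on the user's first event or after a long gap.
            if current is None or ts - last_ts > SESSION_TIMEOUT:
                current = {"user": user, "start": ts, "path": []}
                sessions.append(current)
            current["path"].append(page)
            last_ts = ts
    return sessions


t0 = datetime(2024, 1, 1, 9, 0)
events = [
    ("u1", t0, "/home"),
    ("u1", t0 + timedelta(minutes=5), "/pricing"),
    ("u1", t0 + timedelta(hours=2), "/home"),
    ("u2", t0 + timedelta(minutes=1), "/blog"),
]
for s in sessionize(events):
    print(s["user"], s["path"])
```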

The focus is crafting structured data experiences built for insight, not just storage: clean, consistent data that covers the dimensions and facts needed to answer business questions, is sufficient to inform decisions, and carries metadata for discoverability. The data engineer mindset balances usability with performance and scale behind the scenes.

Data Modeling and Management

Data engineers don’t just move data around; they also model it for usability. Key responsibilities span:

Collecting Quality Data

- Identify relevant sources of raw input data across a company’s tech landscape

- Build adapters and connectors pulling batch or streaming data from APIs and event logs

- Profile ingested data, enforcing schemas, validating values, and addressing issues
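Schema enforcement can start as simply as checking each ingested record against expected field types and value rules. Failures are routed aside instead of being silently loaded. The schema, field names, and rules below are hypothetical. Production pipelines often rely on dedicated validation libraries instead of hand-written checks.

```python
from typing import Any, Callable

# Hypothetical expected schema: field name -> (required type, value rule).
SCHEMA: dict[str, tuple[type, Callable[[Any], bool]]] = {
    "order_id": (int, lambda v: v > 0),
    "email": (str, lambda v: "@" in v),
    "amount": (float, lambda v: v >= 0),
}


def validate(record: dict) -> list[str]:
    """Return a list of problems with `record`; an empty list means it passed."""
    errors = []
    for field, (expected_type, rule) in SCHEMA.items():
        if field not in record:
            errors.append(f"{field}: missing")
        elif not isinstance(record[field], expected_type):
            errors.append(f"{field}: expected {expected_type.__name__}")
        elif not rule(record[field]):
            errors.append(f"{field}: invalid value {record[field]!r}")
    return errors


batch = [
    {"order_id": 1, "email": "a@example.com", "amount": 12.5},
    {"order_id": -2, "email": "not-an-email", "amount": 3.0},
    {"order_id": 3, "amount": "free"},
]
results = [(r, validate(r)) for r in batch]
valid = [r for r, errs in results if not errs]
rejected = {i: errs for i, (_, errs) in enumerate(results) if errs}
print(len(valid), rejected)
```

Keeping the rejected records along with their reasons (rather than dropping them) gives a quarantine set to profile and fix upstream.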

Transforming and Cleansing

- Shape extracted data to serve downstream needs using normalization

- Handle missing values and correct inaccuracies

- Enrich data by merging related attributes from other systems
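The cleansing and enrichment steps above can be sketched in plain Python. A missing value gets a default, a known inaccuracy is corrected with a mapping, and each row is enriched by looking up attributes from a second system. The records, the correction map, and the `region` lookup table are all hypothetical.

```python
# Hypothetical raw customer records from a CRM export.
customers = [
    {"id": 1, "country": "United States", "segment": None},
    {"id": 2, "country": "U.S.A.", "segment": "enterprise"},
    {"id": 3, "country": "Germany", "segment": "smb"},
]

# Map inconsistent spellings to one canonical value.
COUNTRY_FIXES = {"U.S.A.": "United States", "USA": "United States"}

# Attributes from another system (e.g. a finance reference table), keyed by country.
REGION_BY_COUNTRY = {"United States": "AMER", "Germany": "EMEA"}


def cleanse_and_enrich(rows: list[dict]) -> list[dict]:
    out = []
    for row in rows:
        clean = dict(row)  # copy so the raw record stays untouched
        clean["segment"] = clean["segment"] or "unknown"  # handle missing values
        clean["country"] = COUNTRY_FIXES.get(clean["country"], clean["country"])  # correct inaccuracies
        clean["region"] = REGION_BY_COUNTRY.get(clean["country"])  # enrich via lookup (None if unknown)
        out.append(clean)
    return out


for row in cleanse_and_enrich(customers):
    print(row)
```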

Structuring and Managing

- Logically organize related data attributes into relational constructs

- Implement efficient physical database designs balancing storage, performance and maintenance

- Associate technical metadata to enable discovery and governance

| Data Model | Description |
|---|---|
| Conceptual | High-level business entities and their relationships |
| Logical | Entities, attributes, keys, and relationships, independent of any specific database |
| Physical | Database-specific implementation of the logical model: tables, columns, data types, and indexes |

Delivering usable pipelines of quality data requires carefully applied structure. Data modelling provides the frameworks, while data management enforces governance, ensuring integrity, documentation, and business alignment.


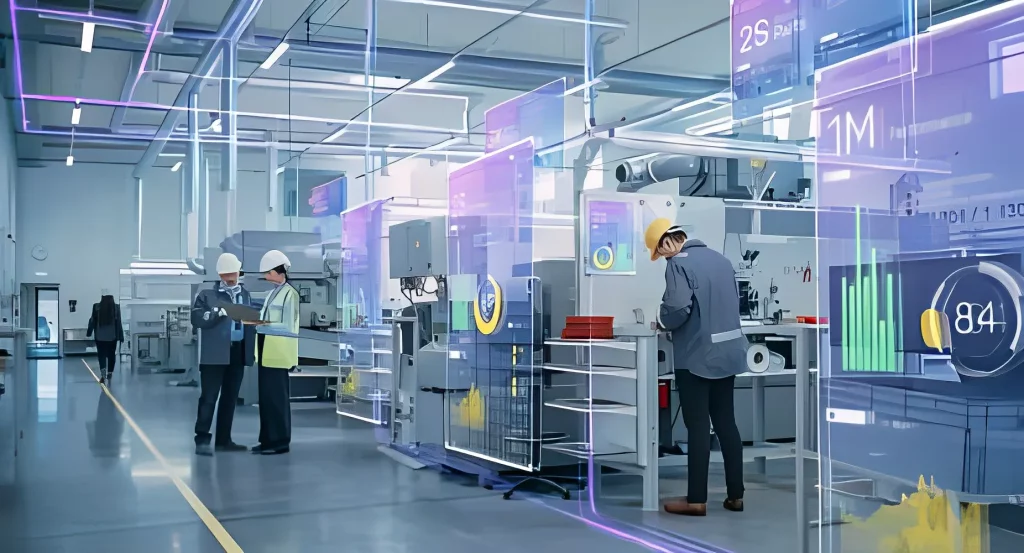

Automation and Optimization

As custodians of expansive data pipelines and architectures, data engineers prioritize creating self-healing, optimized systems through process automation. Automating repetitive tasks and operational workflows boosts efficiency for engineers while improving monitoring and reliability organization-wide.

Automating Key Processes

Tasks prime for automation include:

- Scheduling ETL processes into data destinations

- Triggering reprocessing of flawed data

- Monitoring data lake and database status

- Generating reports from data warehouses

- Executing recurring SQL queries and scripts
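Self-healing automation often begins with retries. When a scheduled step fails transiently (a dropped connection, a locked table), rerun it a few times with exponentially increasing waits before surfacing the error for alerting. The helper below is a minimal, hypothetical sketch. Orchestrators such as Apache Airflow offer built-in retry settings for the same purpose.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
log = logging.getLogger("pipeline")


def run_with_retries(task: Callable[[], T], attempts: int = 3, base_delay: float = 1.0) -> T:
    """Run `task`, retrying failures with exponential backoff (base_delay, 2x, 4x, ...)."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == attempts:
                log.error("task failed after %d attempts", attempts)
                raise  # surface the final error so alerting can pick it up
            delay = base_delay * 2 ** (attempt - 1)
            log.warning("attempt %d failed (%s); retrying in %.2fs", attempt, exc, delay)
            time.sleep(delay)
    raise AssertionError("unreachable")


# Hypothetical flaky task: fails twice, then succeeds.
calls = {"n": 0}


def flaky_load() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("database temporarily unavailable")
    return "loaded"


print(run_with_retries(flaky_load, attempts=3, base_delay=0.01))  # loaded
```

Catching every `Exception` keeps the sketch short. In practice, retrying only known transient error types avoids pointlessly rerunning a task that has a real bug.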

This shifts engineers to higher-value work while minimizing gaps and latency through seamless background automation.

Infrastructure Optimization

Optimization focuses on:

- Maximizing pipeline capacity for growing data volumes

- Meeting compliance and security requirements

- Streamlining ETL and queries through indexes and partitions

- Establishing redundancy in core architecture

- Optimizing ingestion for Spark, Hadoop, and similar systems

Optimization also requires continual tuning as technologies and demands evolve over time.
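The effect of an index on a query plan is easy to observe. The sketch below asks SQLite for its plan (`EXPLAIN QUERY PLAN`) before and after adding an index on the filtered column. The exact wording of the plan varies between SQLite versions, but a full table scan turns into an index search. The `events` table is hypothetical, and other engines expose the same idea through their own `EXPLAIN` commands.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, action TEXT)")
conn.executemany(
    "INSERT INTO events (user_id, action) VALUES (?, ?)",
    [(i % 100, "click") for i in range(1_000)],
)

QUERY = "SELECT action FROM events WHERE user_id = ?"


def plan(sql: str) -> str:
    """Return SQLite's plan description (the last column of each EXPLAIN QUERY PLAN row)."""
    rows = conn.execute(f"EXPLAIN QUERY PLAN {sql}", (42,)).fetchall()
    return "; ".join(row[-1] for row in rows)


before = plan(QUERY)  # expect a full scan of events
conn.execute("CREATE INDEX idx_events_user ON events (user_id)")
after = plan(QUERY)  # expect a search using idx_events_user
print("before:", before)
print("after: ", after)
```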

Key Optimization Strategies

| Automation Area | Common Approaches |
|---|---|
| Platform Scaling | Add read replicas, pods, containers |
| Monitoring | Error logging, notifications, visualization |
| Latency Reduction | Minimize transformation steps, compress data |
| Cost Efficiency | Downsize unused resources on cloud platforms |
| Data Governance | Schema enforcement, de-identification, backup policies |
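For the monitoring row of the table, a simple but useful check compares each load's row count with a trailing average and logs an error when volume drops sharply, which often signals a broken upstream feed. The 50% threshold and the sample counts are assumptions for illustration. Notifications (email, chat, paging) would be triggered from the logged error.

```python
import logging
from statistics import mean

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("monitor")


def check_row_count(history: list[int], today: int, min_ratio: float = 0.5) -> bool:
    """Return True if today's row count is at least `min_ratio` of the trailing average."""
    if not history:
        log.info("no history yet; skipping volume check")
        return True
    baseline = mean(history)
    ok = today >= min_ratio * baseline
    if ok:
        log.info("row count %d within range (baseline %.0f)", today, baseline)
    else:
        log.error("row count %d below %.0f%% of baseline %.0f", today, min_ratio * 100, baseline)
    return ok


last_week = [10_200, 9_950, 10_480, 10_010, 9_870, 10_300, 10_150]
print(check_row_count(last_week, 10_050))  # True
print(check_row_count(last_week, 3_100))   # False
```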

Well-designed automation frees engineers from mundane yet mission-critical responsibilities so they can build higher-value capabilities, advancing organization-wide analytics maturity.

Establishing an Optimization Culture

- Encourage suggestions from across tech teams interfacing with data

- Pilot proposed optimizations on lower environments first

- Collect metrics on key performance indicators

- Monitor impacts before scaling changes

Foster a culture of healthy continual optimization aligned to overarching data strategy.

With exponential data growth across industries, crafting optimized behind-the-scenes automation is imperative to smoothly fuel analysis needs both now and in the future.

Advanced Applications

Beyond foundational data management, data engineers work cross-functionally, enabling advanced applications like business intelligence, data science, and machine learning. Architecting robust pipelines, warehouses and lakes provides the raw material for driving transformative organizational outcomes through analytics.

Enabling Business Intelligence

Data visualization, predictive modelling, benchmarking performance – business intelligence unlocks countless capabilities. Data engineers:

- Build foundations for analytics tools through ETL into centralized data sets

- Create reusable models, measures and dimensions

- Develop scalable infrastructure sustaining future growth

- Ensure smooth integrations between reporting tools, databases and warehouses

Their work powers actionable insights.

Supporting Data Science

To analyze data and communicate findings, data scientists rely on data engineers to provide:

- Quick, simple access to clean datasets

- Compute resources for statistical analysis

- Flexible pipeline changes as experiments evolve

- Tools and languages like Python, SQL, and R for modelling

- Visualization platforms to present discoveries

This simplifies exploration and discovery.

Driving Machine Learning

Training machine learning models depends on massive datasets. Data engineers support model development by:

- Building scalable big data infrastructure on cloud platforms

- ETL processes that label, transform and shuffle data for ML

- Scripting data simulations and scenario testing methods

- Serving models at scale with low latency response

- Monitoring models and retraining pipelines
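For the shuffle-and-split step of ML-facing ETL, reproducibility matters: each record should land in the same training or test set on every pipeline run, so model results stay comparable. One common approach, sketched below, assigns records by hashing a stable ID rather than drawing random numbers. The 20% test fraction, the `cust-*` IDs, and the labels are hypothetical.

```python
import hashlib


def split_bucket(record_id: str, test_fraction: float = 0.2) -> str:
    """Assign a record to 'train' or 'test' deterministically from its ID.

    Uses MD5 rather than Python's built-in hash(), which is randomized per process
    for strings, so the same ID always lands in the same split across runs.
    """
    digest = hashlib.md5(record_id.encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    position = int(digest[:8], 16) / 0x100000000
    return "test" if position < test_fraction else "train"


# Hypothetical labeled examples keyed by customer ID.
labeled = [(f"cust-{i}", i % 2) for i in range(1_000)]
splits: dict[str, list[tuple[str, int]]] = {"train": [], "test": []}
for customer_id, label in labeled:
    splits[split_bucket(customer_id)].append((customer_id, label))

print({name: len(rows) for name, rows in splits.items()})  # roughly an 80/20 split
```

A side benefit of hashing over a seeded shuffle: when new records arrive, existing records keep their assignment, so test examples never leak into training.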

Their work is integral to operationalization.

The applications are endless, but so are the technical complexities. Cross-team engagement and creativity help data engineers continually push boundaries to deliver analytics excellence.

Certifications and Skills Development

To remain competitive, data engineers must continuously develop expertise as practices and technologies rapidly evolve. Whether building skills in emerging areas like machine learning pipelines or earning sought-after certifications, staying at the leading edge is imperative.

Let’s explore top knowledge areas to master along with credentialing programs to validate abilities.

Key Technology Competencies

Priority skills span both infrastructure and analytics:

- Cloud platforms – AWS, Azure, Google Cloud

- Distributed systems – Hadoop, Apache Spark, Kafka

- Storage solutions – SQL, NoSQL, column-based

- Containerization – Docker, Kubernetes

- Streaming and events – Kafka, Apache Beam

- Machine Learning – TensorFlow, PyTorch, SageMaker

Beyond these, keep learning the tools and languages that appear across the data stack, such as Python, R, and Go.

Relevant Data Engineering Certifications

Formal certification can boost expertise while showcasing capabilities to employers. Top programs include:

- Cloudera Certified Associate Data Engineer

- AWS Certified Data Analytics Specialty

- Google Professional Data Engineer

- Microsoft Certified Azure Data Engineer Associate

- IBM Certified Data Engineer

Many offer self-paced online training to prepare for exams. Prioritize the vendors most relevant to your work to focus your efforts.

Cultivating a Growth Mindset

With so much change, having a learning-oriented mindset helps.

- Read blogs and industry sites daily

- Take online courses to gain exposure

- Attend conferences to hear the latest advances

- Experiment with new open-source projects

- Practice continually through side datasets

Make learning itself part of your regular routines.

The mix of emerging priorities and formal credentials keeps data engineers indispensably at the nexus of analytics innovation. Lean into the challenge!

Career Paths and Trajectories

Data engineering offers a rewarding career combining technical skills, creativity, and cross-functional impact. As data permeates all industries, opportunities expand rapidly. Whether just starting out or looking to advance, many potential trajectories exist in this flourishing field.

Entry-Level to Management

Typical career evolution includes:

- Data Analyst – Entry-level, focus on reporting/visualization

- ETL Developer – Specialize in data movement/transformations

- BI Engineer – Expand into enterprise data warehousing

- Data Engineer – Architect core data infrastructure

- Principal/Architect – Strategic direction, data governance

- Data Manager – Lead a team of data engineers

Many blend technical and managerial responsibility as they progress.

Comparison to Data Scientists

Data engineers vs data scientists:

- Data Engineers – Build, operationalize, and scale data pipelines and architecture for reliability and accessibility.

- Data Scientists – Apply advanced analytics, statistical modelling and machine learning algorithms to derive insights.

There is strong collaboration between the roles, creating symbiotic value.

Growth Trajectory

The future looks bright, according to the U.S. Bureau of Labor Statistics:

- Demand for data engineers is projected to increase by 25% from 2016 to 2026

- That is over twice the average growth rate for other tech jobs

- High demand stems from digital transformation across industries

Salaries reflect this demand, with competitive compensation at every experience level.

Sample Data Engineering Salaries

| Experience | Average Salary (USD) | Highest Salaries (USD) |
|---|---|---|
| 0-1 years | $68,500 | $121,000 |
| 2-4 years | $88,000 | $148,000 |
| 5+ years | $117,000 | $180,000 |

Adjusted for cost of living, data engineers rank among the top-paying tech careers.

With strong forecast demand, abundant career growth opportunities, and lucrative salaries, data engineering delivers on many dimensions. Bring your passion and demonstrate impact for a bright future!

Conclusion

The data engineer’s success blueprint involves a dynamic interplay of technical skills, adaptability, and a growth-oriented mindset. As the data landscape evolves, these professionals, armed with their expertise, will continue to be at the forefront of driving innovation, enabling informed decisions, and shaping the future of data-driven enterprises. Embrace the challenge, keep learning, and bring your passion to the table: a bright future in data engineering awaits!